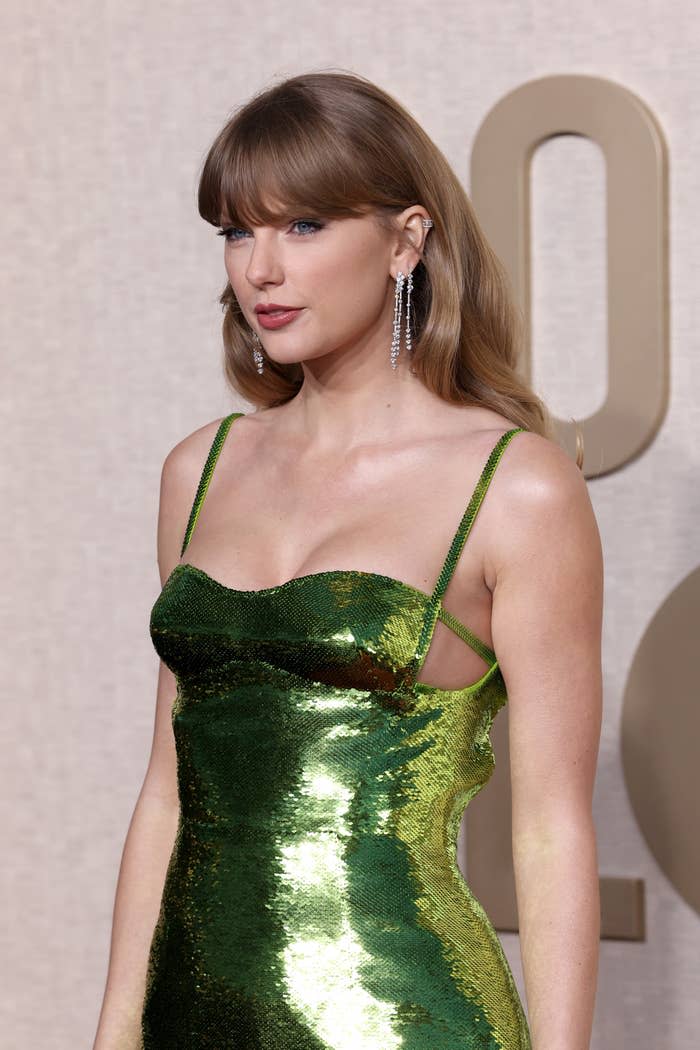

After Sexually Explicit AI Images Of Taylor Swift Circulated Online, SAG-AFTRA And The White House Issued Statements

If you've been online the last few days, it's possible — not necessarily likely, but possible — that you've seen AI-generated, sexually explicit images of Taylor Swift on social media.

Obviously, we're not going to share them here — and we're going to avoid sharing reactions as well, because that might lead to the images being more widely shared.

But this is only the latest example of technology being used to inflict sexual violence on women through deepfake pornography. It's misogynistic and disgusting, and it's further proof that technological advances such as AI need to be strictly regulated to protect people's safety online.

Others appear to share that view. SAG-AFTRA issued a statement calling the images "upsetting, harmful, and deeply concerning."

"The development and dissemination of fake images — especially those of a lewd nature — without someone’s consent must be made illegal," the organization said. "As a society, we have it in our power to control these technologies, but we must act now before it is too late."

"SAG-AFTRA continues to support legislation by US Rep. Joe Morelle, the Preventing Deepfakes of Intimate Images Act, to make sure we stop exploitation of this nature from happening again. We support Taylor and women everywhere who are the victims of this kind of theft of their privacy and right to autonomy."

White House Press Secretary Karine Jean-Pierre also said the White House was troubled by the images circulating. "We are alarmed by the reports of the…circulation of images that you just laid out — of false images to be more exact, and it is alarming," she told ABC News.

"While social media companies make their own independent decisions about content management, we believe they have an important role to play in enforcing their own rules to prevent the spread of misinformation, and non-consensual, intimate imagery of real people."

You can read more about the damaging effects of deepfake pornography here.

If you've been the target of revenge porn, the Federal Trade Commission has outlined some resources available to you, as well as some steps you can take to protect yourself.